A Complete Collection of English Mottos

1. A bad workman always blames his tools. (A clumsy craftsman always blames poor tools.)

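2. A contented mind is a perpetual feast. (Contentment brings lasting happiness.)

3. A good beginning is half the battle. (A good start is half the success.)

4. A little pot is soon hot. (A small pot heats quickly; a small-minded person angers quickly.)

5. All lay loads on a willing horse. (A good horse carries heavy loads.)

6. A merry heart goes all the way. (With a cheerful heart, everything goes smoothly.)

7. Bad excuses are worse than none. (Quibbling is worse than offering no defense at all.)

8. Character is the first and last word in the success circle. (A person's character is the prerequisite for success.)

9. Cleanliness is next to godliness. (Tidiness is close to virtue.)

10. Courtesy costs nothing. (Courtesy benefits others and costs you nothing.)

11. Doing nothing is doing ill. (Those with nothing to do are bound to get into mischief.)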

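12. Early to bed, early to rise, makes a man healthy, wealthy, and wise.

(Go to bed early and get up early, and you will be clever, prosperous, and healthy.)

13. Empty vessels make the most noise. (A full bottle makes no sound; a half-full bottle sloshes about.)

14. Every man hath his weak side. (Everyone has weaknesses.)

15. Everything ought to be beautiful in a human being: face, dress, soul and idea.

(Everything about a person should be beautiful: appearance, clothing, heart, and thoughts.)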

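16. Extremes are dangerous. (Taking anything to an extreme is dangerous.)

17. Good advice is harsh to the ear. (Honest advice grates on the ear.)

18. Grasp all, lose all. (Try to gain everything and you will lose everything.)

19. Great hopes make great men. (Great ideals create great people.)

20. Handsome is he who does handsomely. (Only those whose conduct is beautiful are truly beautiful.)

21. Have but few friends, though many acquaintances. (Acquaintances may be many, but close friends should be few.)

22. Hear all parties. (Listen to all sides and you will see clearly; heed only one and you will be in the dark.)

23. He is a wise man who speaks little. (The wise speak little.)

24. He is not laughed at that laughs at himself first.

(Those who know themselves are respected by others.)

25. He is rich enough that wants nothing.

(The one without desires is the richest; the greedy are the poorest.)

26. He is truly happy who makes others happy.

(One who makes others happy is truly happy.)

27. Honesty is the best policy. (Honesty is the best strategy.)

28. Hope for the best and prepare for the worst. (Hold on to the best hopes and make the worst-case preparations.)

29. Idleness is the root of all evil. (Laziness is the source of all evils.)

30. If we dream, everything is possible. (Dare to dream, and everything will become possible.)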

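31. Kind hearts are the gardens, kind thoughts are the roots, kind words are flowers and kind deeds are the fruits.

(A kind heart is the garden, noble thoughts are the roots, friendly words are the flowers, and good deeds are the fruit.)

32. Laugh, and the world laughs with you; weep, and you weep alone.

(Laugh, and the whole world laughs with you; cry, and you weep alone in a corner.)

33. Life is measured by thought and action, not by time.

(Life is measured by thoughts and deeds, not by time.)

34. Life is not all beer and skittles. (Life is not all pleasure.)

35. Long absent, soon forgotten. (A long separation cools affection.)

36. Look before you leap. (Think it over three times before you act.)

37. Lookers-on see most of the game. (The onlooker sees clearly; the player is confused.)

38. Manners make the man. (Watch how someone treats others and you will know who they are.)

39. Misfortune tests the sincerity of friends. (Hardship reveals true friends.)

40. No cross, no crown. (Without hardship, there is no joy.)

41. Nobody's enemy but his own. (He only brings trouble on himself.)

42. One man's fault is another man's lesson. (The cart that overturns ahead is a warning to the cart behind.)

43. Pardon all men, but never thyself. (Be strict with yourself and lenient with others.)

44. Reason is the guide and light of life. (Reason is the lighthouse of life.)

45. Sadness and gladness succeed one another. (Extreme joy turns to sorrow; when bitterness ends, sweetness comes.)

46. Still waters run deep. (Still water runs deep; a quiet person has hidden depths.)

47. The fire is the test of gold; adversity of strong men. (Fierce fire refines true gold; adversity forges strong men.)

48. The fox may grow grey, but never good. (Rivers and mountains are easily changed; a person's nature is hard to alter.)

49. The more a man learns, the more he sees his ignorance. (The broader one's knowledge, the more one feels one's own ignorance.)

50. Virtue is a jewel of great price. (Virtue is a priceless treasure.)

51. Weak things united become strong. (One chopstick snaps easily; ten chopsticks together are as hard as iron.)

52. We can't judge a person by what he says but by what he does.

(To judge a person, don't listen to their words; watch their actions.)

53. Where there is a will there is a way. (Where there is resolve, the task will be accomplished.)

54. Will is power. (Willpower is strength.)

55. Wise men are silent; fools talk. (The wise are quiet and say little; fools talk on and on.)

56. Wise men learn by others' harm, fools by their own.

(The wise learn from others' setbacks; fools only learn after hitting the wall themselves.)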

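1. Pain past is pleasure.

(Past pain is pleasure.) [However hard things get, grit your teeth and push through; one day the memory will be wonderfully sweet.]

2. While there is life, there is hope.

(Where there is life, there is hope / As long as the green hills remain, there will be no shortage of firewood.)

3. Wisdom in the mind is better than money in the hand.

(Knowledge in the head is worth more than money in the hand.) [A firm belief to instill in children from an early age.]

4. Storms make trees take deeper roots.

(Storms make trees root deeply.) [Be grateful to your enemies; be grateful for your setbacks!]

5. Nothing is impossible for a willing heart.

(Whatever the heart truly desires, it can accomplish.) [Hold on to one simple belief and you will surely succeed.]

6. The shortest answer is doing.

(The simplest answer is to act.) [Want to speak fluent English? Then start talking now! Moving your mouth beats merely being moved in your heart.]

7. All things are difficult before they are easy.

(Everything is hard at first and easy afterward.) [Give up the fantasy of shortcuts.]

8. Great hopes make great men.

(Great ideals create great people.)

9. God helps those who help themselves.

(Heaven helps those who help themselves.)

10. Four short words sum up what has lifted most successful individuals above the crowd: a little bit more.

(Four short words sum up the secret of success: a little bit more!) [A little more effort, self-discipline, determination, reflection, study, practice, and craziness than others; just a little bit more can work miracles!]

11. In doing we learn.

(Practice builds ability.)

12. East or west, home is best.

(East is good and west is good, but home is best.)

13. Two heads are better than one.

(Three humble cobblers together are a match for Zhuge Liang.)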

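14. Good company on the road is the shortest cut.

(A good companion on the road is itself a shortcut.)

15. Constant dropping wears the stone.

(Dripping water wears through stone.)

16. Misfortunes never come alone/singly.

(Misfortunes never come singly.)

17. Misfortunes tell us what fortune is.

(Without misfortune, one never knows what good fortune is.)

18. Better late than never.

(Doing it late is better than not doing it; coming late is better than not coming.)

19. It's never too late to mend.

(There is no greater good than correcting one's mistakes; mending the fold after the sheep are lost is still not too late.)

20. If a thing is worth doing, it is worth doing well.

(If something is worth doing, it is worth doing well.)

21. Nothing great was ever achieved without enthusiasm.

(Without passion, no great undertaking can be achieved.)

22. Actions speak louder than words.

(Actions ring louder than words.)

23. Lifeless, faultless.

(Only the dead make no mistakes.)

24. From small beginnings come great things.

(Greatness begins in smallness.)

25. One today is worth two tomorrows.

(One today is better than two tomorrows.)

26. Truth never fears investigation.

(Facts never fear investigation.)

27. The tongue is boneless but it breaks bones.

(The tongue has no bones, yet it can break bones.)

28. A bold attempt is half success.

(A brave attempt is half of success.)

29. Knowing something of everything and everything of something. (Be versed in many arts, but master one.) [Crazy tongue-biting: a workout for the "th" sound!]

30. Good advice is beyond all price.

(Good advice is a priceless treasure.)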

Part Two: English Text

In the previous chapters we explained schema optimization and indexing, which are necessary for high performance. But they aren’t enough: you also need to design your queries well. If your queries are bad, even the best-designed schema and indexes will not perform well.

Query optimization, index optimization, and schema optimization go hand in hand. As you gain experience writing queries in MySQL, you will learn how to design tables and indexes to support efficient queries. Similarly, what you learn about optimal schema design will influence the kinds of queries you write. This process takes time, so we encourage you to refer back to these three chapters as you learn more.

This chapter begins with general query design considerations—the things you should consider first when a query isn’t performing well. We then dig much deeper into query optimization and server internals. We show you how to find out how MySQL executes a particular query, and you’ll learn how to change the query execution plan. Finally, we’ll look at some places MySQL doesn’t optimize queries well and explore query optimization patterns that help MySQL execute queries more efficiently.

Our goal is to help you understand deeply how MySQL really executes queries, so you can reason about what is efficient or inefficient, exploit MySQL’s strengths, and avoid its weaknesses.

Why Are Queries Slow?

Before trying to write fast queries, remember that it’s all about response time. Queries are tasks, but they are composed of subtasks, and those subtasks consume time. To optimize a query, you must optimize its subtasks by eliminating them, making them happen fewer times, or making them happen more quickly.

What are the subtasks that MySQL performs to execute a query, and which ones are slow? The full list is impossible to include here, but if you profile a query as we showed in Chapter 3, you will find out what tasks it performs. In general, you can think of a query’s lifetime by mentally following the query through its sequence diagram from the client to the server, where it is parsed, planned, and executed, and then back again to the client. Execution is one of the most important stages in a query’s lifetime. It involves lots of calls to the storage engine to retrieve rows, as well as post-retrieval operations such as grouping and sorting.

While accomplishing all these tasks, the query spends time on the network, in the CPU, in operations such as statistics and planning, locking (mutex waits), and most especially, calls to the storage engine to retrieve rows. These calls consume time in memory operations, CPU operations, and especially I/O operations if the data isn’t in memory. Depending on the storage engine, a lot of context switching and/or system calls might also be involved.

In every case, excessive time may be consumed because the operations are performed needlessly, performed too many times, or are too slow. The goal of optimization is to avoid that, by eliminating or reducing operations, or making them faster.

Again, this isn’t a complete or accurate picture of a query’s life. Our goal here is to show the importance of understanding a query’s lifecycle and thinking in terms of where the time is consumed. With that in mind, let’s see how to optimize queries.

Slow Query Basics: Optimize Data Access

The most basic reason a query doesn’t perform well is because it’s working with too much data. Some queries just have to sift through a lot of data and can’t be helped. That’s unusual, though; most bad queries can be changed to access less data. We’ve found it useful to analyze a poorly performing query in two steps:

1. Find out whether your application is retrieving more data than you need. That usually means it’s accessing too many rows, but it might also be accessing too many columns.

2. Find out whether the MySQL server is analyzing more rows than it needs.

Are You Asking the Database for Data You Don’t Need?

Some queries ask for more data than they need and then throw some of it away. This demands extra work of the MySQL server, adds network overhead, and consumes memory and CPU resources on the application server.

Here are a few typical mistakes:

Fetching more rows than needed

One common mistake is assuming that MySQL provides results on demand, rather than calculating and returning the full result set. We often see this in applications designed by people familiar with other database systems. These developers are used to techniques such as issuing a SELECT statement that returns many rows, then fetching the first N rows and closing the result set (e.g., fetching the 100 most recent articles for a news site when they only need to show 10 of them on the front page). They think MySQL will provide them with these 10 rows and stop executing the query, but what MySQL really does is generate the complete result set. The client library then fetches all the data and discards most of it. The best solution is to add a LIMIT clause to the query.
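The sketch below contrasts the two approaches. It uses Python's built-in sqlite3 module as a stand-in for a MySQL client. That substitution is an assumption made only to keep the example self-contained, and SQLite does not reproduce MySQL's client/server transfer costs. The `articles` table and its columns are hypothetical. The first function pulls the whole result and keeps 10 rows in the application. The second lets LIMIT cap the result at 10.

```python
import sqlite3

def make_db(n_articles=100):
    """Build an in-memory stand-in for a hypothetical `articles` table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE articles (id INTEGER PRIMARY KEY, title TEXT, published INTEGER)")
    conn.executemany(
        "INSERT INTO articles (title, published) VALUES (?, ?)",
        [(f"Article {i}", i) for i in range(n_articles)],
    )
    return conn

def front_page_wasteful(conn):
    """Anti-pattern: fetch every row, newest first, then keep only 10 in the app."""
    rows = conn.execute(
        "SELECT id, title FROM articles ORDER BY published DESC"
    ).fetchall()
    return rows[:10], len(rows)  # (rows shown, rows transferred)

def front_page_limited(conn):
    """Ask the database for exactly the 10 rows the page displays."""
    rows = conn.execute(
        "SELECT id, title FROM articles ORDER BY published DESC LIMIT 10"
    ).fetchall()
    return rows, len(rows)

conn = make_db()
shown_a, transferred_a = front_page_wasteful(conn)
shown_b, transferred_b = front_page_limited(conn)
print(transferred_a, transferred_b)  # 100 10
```

Both versions show the same 10 articles. Only the amount of data produced and shipped to the client differs.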

Fetching all columns from a multitable join

If you want to retrieve all actors who appear in the film Academy Dinosaur, don’t write the query this way:

```
mysql> SELECT * FROM sakila.actor
    -> INNER JOIN sakila.film_actor USING(actor_id)
    -> INNER JOIN sakila.film USING(film_id)
    -> WHERE sakila.film.title = 'Academy Dinosaur';
```

That returns all columns from all three tables. Instead, write the query as follows:

```
mysql> SELECT sakila.actor.* FROM sakila.actor...;
```

Fetching all columns

You should always be suspicious when you see SELECT *. Do you really need all columns? Probably not. Retrieving all columns can prevent optimizations such as covering indexes, as well as adding I/O, memory, and CPU overhead for the server. Some DBAs ban SELECT * universally because of this fact, and to reduce the risk of problems when someone alters the table’s column list.

Of course, asking for more data than you really need is not always bad. In many cases we’ve investigated, people tell us the wasteful approach simplifies development, because it lets the developer use the same bit of code in more than one place. That’s a reasonable consideration, as long as you know what it costs in terms of performance. It might also be useful to retrieve more data than you actually need if you use some type of caching in your application, or if you have another benefit in mind. Fetching and caching full objects might be preferable to running many separate queries that retrieve only parts of the object.

Fetching the same data repeatedly

If you’re not careful, it’s quite easy to write application code that retrieves the same data repeatedly from the database server, executing the same query to fetch it. For example, if you want to find out a user’s profile image URL to display next to a list of comments, you might request this repeatedly for each comment. Or you could cache it the first time you fetch it, and reuse it thereafter. The latter approach is much more efficient.
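The caching alternative can be sketched as follows, again with sqlite3 standing in for MySQL. The `users` table, its `profile_image_url` column, and the `CountingDB` wrapper that tallies queries are all hypothetical names chosen for illustration.

```python
import sqlite3

class CountingDB:
    """Thin wrapper that counts the queries the application sends."""
    def __init__(self, conn):
        self.conn = conn
        self.queries = 0

    def fetch_one(self, sql, params=()):
        self.queries += 1
        return self.conn.execute(sql, params).fetchone()

def make_db():
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, profile_image_url TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [(1, "https://example.com/u1.png"), (2, "https://example.com/u2.png")],
    )
    return CountingDB(conn)

SQL = "SELECT profile_image_url FROM users WHERE id = ?"

def urls_uncached(db, author_ids):
    """Re-run the same query for every comment, even for repeat authors."""
    return [db.fetch_one(SQL, (uid,))[0] for uid in author_ids]

def urls_cached(db, author_ids):
    """Query each author once, then reuse the cached URL."""
    cache = {}  # user id -> profile image URL
    urls = []
    for uid in author_ids:
        if uid not in cache:
            cache[uid] = db.fetch_one(SQL, (uid,))[0]
        urls.append(cache[uid])
    return urls

authors = [1, 2, 1, 1, 2]  # author of each comment, in display order
db_a, db_b = make_db(), make_db()
same = urls_uncached(db_a, authors) == urls_cached(db_b, authors)
print(same, db_a.queries, db_b.queries)  # True 5 2
```

Here the cache is a plain dictionary that lives for one page render. A cache that outlives the request would also need a rule for invalidating stale URLs.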

Is MySQL Examining Too Much Data?

Once you’re sure your queries retrieve only the data you need, you can look for queries that examine too much data while generating results. In MySQL, the simplest query cost metrics are:

• Response time

• Number of rows examined

• Number of rows returned

None of these metrics is a perfect way to measure query cost, but they reflect roughly how much data MySQL must access internally to execute a query and translate approximately into how fast the query runs. All three metrics are logged in the slow query log, so looking at the slow query log is one of the best ways to find queries that examine too much data.

Response time

Beware of taking query response time at face value. Hey, isn’t that the opposite of what we’ve been telling you? Not really. It’s still true that response time is what matters, but it’s a bit complicated.

Response time is the sum of two things: service time and queue time. Service time is how long it takes the server to actually process the query. Queue time is the portion of response time during which the server isn’t really executing the query because it’s waiting for something, such as an I/O operation to complete, a row lock, and so forth. The problem is, you can’t break the response time down into these components unless you can measure them individually, which is usually hard to do. In general, the most common and important waits you’ll encounter are I/O and lock waits, but you shouldn’t count on that, because it varies a lot.

As a result, response time is not consistent under varying load conditions. Other factors, such as storage engine locks (table locks and row locks), high concurrency, and hardware, can also have a considerable impact on response times. Response time can also be both a symptom and a cause of problems, and it’s not always obvious which is the case, unless you can use the techniques shown in “Single-Query Versus Server-Wide Problems” on page 93 to find out.

When you look at a query’s response time, you should ask yourself whether the response time is reasonable for the query. We don’t have space for a detailed explanation in this book, but you can actually calculate a quick upper-bound estimate (QUBE) of query response time using the techniques explained in Tapio Lahdenmaki and Mike Leach’s book Relational Database Index Design and the Optimizers (Wiley). In a nutshell: examine the query execution plan and the indexes involved, determine how many sequential and random I/O operations might be required, and multiply these by the time it takes your hardware to perform them. Add it all up and you have a yardstick to judge whether a query is slower than it could or should be.
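The QUBE arithmetic described above fits in a few lines. This is a simplified illustration, not Lahdenmaki and Leach's full method. The per-operation costs (10 ms per random I/O, 0.01 ms per sequential I/O) are placeholder assumptions, and the I/O counts describe two made-up plans.

```python
def qube_estimate_ms(random_ios, sequential_ios,
                     random_io_ms=10.0, sequential_io_ms=0.01):
    """Quick upper-bound response-time estimate, in milliseconds.

    Multiply each I/O count by what one such operation costs and add the results.
    The default per-operation costs are placeholder assumptions, not measurements;
    substitute figures for your own hardware.
    """
    return random_ios * random_io_ms + sequential_ios * sequential_io_ms

# Made-up plan 1: 11 random I/Os (an index probe plus 10 row reads) and
# 10 sequential index entries scanned along the way.
indexed = qube_estimate_ms(random_ios=11, sequential_ios=10)
# Made-up plan 2: a full scan of a 1,000,000-row table: one random I/O to
# reach the start of the table, then sequential reads of every row.
full_scan = qube_estimate_ms(random_ios=1, sequential_ios=1_000_000)
print(f"indexed: {indexed:.1f} ms, full scan: {full_scan:.1f} ms")
```

If a query's measured response time is far above its estimate, something other than I/O (lock waits, for example) is probably adding time.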

Rows examined and rows returned

It’s useful to think about the number of rows examined when analyzing queries, because you can see how efficiently the queries are finding the data you need. However, this is not a perfect metric for finding “bad” queries. Not all row accesses are equal. Shorter rows are faster to access, and fetching rows from memory is much faster than reading them from disk.

Ideally, the number of rows examined would be the same as the number returned, but in practice this is rarely possible. For example, when constructing rows with joins, the server must access multiple rows to generate each row in the result set. The ratio of rows examined to rows returned is usually small—say, between 1:1 and 10:1—but sometimes it can be orders of magnitude larger.

Rows examined and access types

When you’re thinking about the cost of a query, consider the cost of finding a single row in a table. MySQL can use several access methods to find and return a row. Some require examining many rows, but others might be able to generate the result without examining any.

The access method(s) appear in the type column in EXPLAIN’s output. The access types range from a full table scan to index scans, range scans, unique index lookups, and constants. Each of these is faster than the one before it, because it requires reading less data. You don’t need to memorize the access types, but you should understand the general concepts of scanning a table, scanning an index, range accesses, and single value accesses.

If you aren’t getting a good access type, the best way to solve the problem is usually by adding an appropriate index. We discussed indexing in the previous chapter; now you can see why indexes are so important to query optimization. Indexes let MySQL find rows with a more efficient access type that examines less data.
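To make the row-count effect concrete, here is a toy model in plain Python (not MySQL). It uses a list of hypothetical film_actor-style rows, a full scan that reads every row, and a dictionary acting as a stand-in for an index. A dictionary is a hash table rather than the B-tree MySQL typically uses, so only the count of examined rows carries over, not the mechanics.

```python
from collections import defaultdict

# Hypothetical film_actor-style rows: 100 films with 10 actors each.
ROWS = [{"actor_id": a, "film_id": f} for f in range(1, 101) for a in range(1, 11)]

def full_scan(rows, film_id):
    """Read every row and filter afterwards (compare type: ALL, Extra: Using where)."""
    examined, matches = 0, []
    for row in rows:
        examined += 1
        if row["film_id"] == film_id:
            matches.append(row)
    return matches, examined

def build_index(rows):
    """Toy secondary index: film_id -> positions of the matching rows."""
    index = defaultdict(list)
    for pos, row in enumerate(rows):
        index[row["film_id"]].append(pos)
    return index

def index_lookup(rows, index, film_id):
    """Visit only the rows the index points at (compare type: ref)."""
    positions = index.get(film_id, [])
    return [rows[p] for p in positions], len(positions)

scan_rows, scan_examined = full_scan(ROWS, 1)
idx_rows, idx_examined = index_lookup(ROWS, build_index(ROWS), 1)
print(scan_examined, idx_examined)  # 1000 10
```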

For example, let’s look at a simple query on the Sakila sample database:

```
mysql> SELECT * FROM sakila.film_actor WHERE film_id = 1;
```

This query will return 10 rows, and EXPLAIN shows that MySQL uses the ref access type on the idx_fk_film_id index to execute the query:

```
mysql> EXPLAIN SELECT * FROM sakila.film_actor WHERE film_id = 1\G
*************************** 1. row ***************************
           id: 1
  select_type: SIMPLE
        table: film_actor
         type: ref
possible_keys: idx_fk_film_id
          key: idx_fk_film_id
      key_len: 2
          ref: const
         rows: 10
        Extra:
```

EXPLAIN shows that MySQL estimated it needed to access only 10 rows. In other words, the query optimizer knew the chosen access type could satisfy the query efficiently. What would happen if there were no suitable index for the query? MySQL would have to use a less optimal access type, as we can see if we drop the index and run the query again:

```
mysql> ALTER TABLE sakila.film_actor DROP FOREIGN KEY fk_film_actor_film;
mysql> ALTER TABLE sakila.film_actor DROP KEY idx_fk_film_id;
mysql> EXPLAIN SELECT * FROM sakila.film_actor WHERE film_id = 1\G
*************************** 1. row ***************************
           id: 1
  select_type: SIMPLE
        table: film_actor
         type: ALL
possible_keys: NULL
          key: NULL
      key_len: NULL
          ref: NULL
         rows: 5073
        Extra: Using where
```

Predictably, the access type has changed to a full table scan (ALL), and MySQL now estimates it’ll have to examine 5,073 rows to satisfy the query. The “Using where” in the Extra column shows that the MySQL server is using the WHERE clause to discard rows after the storage engine reads them.

In general, MySQL can apply a WHERE clause in three ways, from best to worst:

• Apply the conditions to the index lookup operation to eliminate nonmatching rows. This happens at the storage engine layer.

• Use a covering index (“Using index” in the Extra column) to avoid row accesses, and filter out nonmatching rows after retrieving each result from the index. This happens at the server layer, but it doesn’t require reading rows from the table.

• Retrieve rows from the table, then filter nonmatching rows (“Using where” in the Extra column). This happens at the server layer and requires the server to read rows from the table before it can filter them.
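SQLite offers a comparable signal for the covering-index case. Its `EXPLAIN QUERY PLAN` says `USING COVERING INDEX` when every column a query needs is stored in the index, which loosely matches MySQL's “Using index”. The sketch below assumes a hypothetical composite index on `(film_id, actor_id)` and uses sqlite3 only as a stand-in for MySQL.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE film_actor (actor_id INTEGER, film_id INTEGER, last_update TEXT)")
# Hypothetical composite index containing every column the first query touches.
conn.execute("CREATE INDEX idx_film_actor ON film_actor (film_id, actor_id)")

def plan(sql):
    """Return the detail strings from SQLite's EXPLAIN QUERY PLAN."""
    return [row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql)]

# actor_id and film_id both live in the index, so no table row has to be read.
covered = plan("SELECT actor_id FROM film_actor WHERE film_id = 1")
# SELECT * also needs last_update, so each match requires a table row lookup.
not_covered = plan("SELECT * FROM film_actor WHERE film_id = 1")
print(covered)
print(not_covered)
```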

This example illustrates how important it is to have good indexes. Good indexes help your queries get a good access type and examine only the rows they need.

However, adding an index doesn’t always mean that MySQL will access and return the same number of rows. For example, here’s a query that uses the COUNT() aggregate function:

```
mysql> SELECT actor_id, COUNT(*) FROM sakila.film_actor GROUP BY actor_id;
```

This query returns only 200 rows, but it needs to read thousands of rows to build the result set. An index can’t reduce the number of rows examined for a query like this one.

Unfortunately, MySQL does not tell you how many of the rows it accessed were used to build the result set; it tells you only the total number of rows it accessed. Many of these rows could be eliminated by a WHERE clause and end up not contributing to the result set. In the previous example, after removing the index on sakila.film_actor, the query accessed every row in the table and the WHERE clause discarded all but 10 of them. Only the remaining 10 rows were used to build the result set. Understanding how many rows the server accesses and how many it really uses requires reasoning about the query.

If you find that a huge number of rows were examined to produce relatively few rows in the result, you can try some more sophisticated fixes:

• Use covering indexes, which store data so that the storage engine doesn’t have to retrieve the complete rows. (We discussed these in the previous chapter.)

• Change the schema. An example is using summary tables (discussed in Chapter 4).

• Rewrite a complicated query so the MySQL optimizer is able to execute it optimally. (We discuss this later in this chapter.)

Ways to Restructure Queries

As you optimize problematic queries, your goal should be to find alternative ways to get the result you want—but that doesn’t necessarily mean getting the same result set back from MySQL. You can sometimes transform queries into equivalent forms that return the same results, and get better performance. However, you should also think about rewriting the query to retrieve different results, if that provides an efficiency benefit. You might be able to ultimately do the same work by changing the application code as well as the query. In this section, we explain techniques that can help you restructure a wide range of queries and show you when to use each technique.

Complex Queries Versus Many Queries

One important query design question is whether it’s preferable to break up a complex query into several simpler queries. The traditional approach to database design emphasizes doing as much work as possible with as few queries as possible. This approach was historically better because of the cost of network communication and the overhead of the query parsing and optimization stages.

However, this advice doesn’t apply as much to MySQL, because it was designed to handle connecting and disconnecting very efficiently and to respond to small and simple queries very quickly. Modern networks are also significantly faster than they used to be, reducing network latency. Depending on the server version, MySQL can run well over 100,000 simple queries per second on commodity server hardware and over 2,000 queries per second from a single correspondent on a gigabit network, so running multiple queries isn’t necessarily such a bad thing.

Connection response is still slow compared to the number of rows MySQL can traverse per second internally, though, which is counted in millions per second for in-memory data. All else being equal, it’s still a good idea to use as few queries as possible, but sometimes you can make a query more efficient by decomposing it and executing a few simple queries instead of one complex one. Don’t be afraid to do this; weigh the costs, and go with the strategy that causes less work. We show some examples of this technique a little later in the chapter.

That said, using too many queries is a common mistake in application design. For example, some applications perform 10 single-row queries to retrieve data from a table when they could use a single 10-row query. We’ve even seen applications that retrieve each column individually, querying each row many times!
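The contrast between 10 single-row queries and one 10-row query can be sketched with sqlite3. Because SQLite runs in-process, the sketch shows the query count rather than real network round trips. The `users` table is hypothetical. The IN() list is built from `?` placeholders so that the values stay parameterized.

```python
import sqlite3

def make_db():
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users VALUES (?, ?)",
                     [(i, f"user{i}") for i in range(1, 101)])
    return conn

def fetch_one_at_a_time(conn, ids):
    """Anti-pattern: one query (a round trip, in a client/server database) per row."""
    rows, queries = [], 0
    for i in ids:
        rows.append(conn.execute("SELECT id, name FROM users WHERE id = ?", (i,)).fetchone())
        queries += 1
    return rows, queries

def fetch_in_one_query(conn, ids):
    """One query for all rows; returns (rows, number_of_queries_issued)."""
    if not ids:
        return [], 0  # an empty IN () list is not valid in MySQL, so skip the query
    placeholders = ", ".join("?" for _ in ids)
    sql = f"SELECT id, name FROM users WHERE id IN ({placeholders}) ORDER BY id"
    return conn.execute(sql, list(ids)).fetchall(), 1

conn = make_db()
ids = list(range(1, 11))
many_rows, many_q = fetch_one_at_a_time(conn, ids)
one_rows, one_q = fetch_in_one_query(conn, ids)
print(many_q, one_q)  # 10 1
```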

Chopping Up a Query

Another way to slice up a query is to divide and conquer, keeping it essentially the same but running it in smaller “chunks” that affect fewer rows each time. Purging old data is a great example. Periodic purge jobs might need to remove quite a bit of data, and doing this in one massive query could lock a lot of rows for a long time, fill up transaction logs, hog resources, and block small queries that shouldn’t be interrupted. Chopping up the DELETE statement and using medium-size queries can improve performance considerably, and reduce replication lag when a query is replicated. For example, instead of running this monolithic query:

```
mysql> DELETE FROM messages WHERE created < DATE_SUB(NOW(),INTERVAL 3 MONTH);
```

you could do something like the following pseudocode:

```
rows_affected = 0
do {
    rows_affected = do_query(
        "DELETE FROM messages WHERE created < DATE_SUB(NOW(),INTERVAL 3 MONTH)
        LIMIT 10000")
} while rows_affected > 0
```

Deleting 10,000 rows at a time is typically a large enough task to make each query efficient, and a short enough task to minimize the impact on the server (transactional storage engines might benefit from smaller transactions). It might also be a good idea to add some sleep time between the DELETE statements to spread the load over time and reduce the amount of time locks are held.
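The following is a runnable version of the pseudocode above, under two stated assumptions. First, sqlite3 stands in for MySQL. Second, default SQLite builds do not accept DELETE ... LIMIT, so each chunk is selected with a rowid subquery instead. Against MySQL you would keep the DELETE ... LIMIT 10000 form. In addition, `created` is simplified to an integer day number, and the caller computes the cutoff that DATE_SUB(NOW(), INTERVAL 3 MONTH) would produce.

```python
import sqlite3
import time

def purge_in_chunks(conn, cutoff, chunk_size=10_000, pause_s=0.0):
    """Delete rows with created < cutoff, at most chunk_size rows per statement.

    Returns (rows_deleted, chunks_that_deleted_something).
    """
    total, chunks = 0, 0
    while True:
        # Default SQLite builds lack DELETE ... LIMIT, so pick each chunk by rowid.
        cur = conn.execute(
            "DELETE FROM messages WHERE rowid IN "
            "(SELECT rowid FROM messages WHERE created < ? LIMIT ?)",
            (cutoff, chunk_size),
        )
        conn.commit()  # end the transaction so its locks are released per chunk
        if cur.rowcount == 0:
            break
        total += cur.rowcount
        chunks += 1
        time.sleep(pause_s)  # optional pause to spread the load over time
    return total, chunks

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE messages (id INTEGER PRIMARY KEY, created INTEGER)")
# Hypothetical data: `created` is a day number; 25,000 old rows, 500 recent ones.
conn.executemany("INSERT INTO messages (created) VALUES (?)",
                 [(1,)] * 25_000 + [(200,)] * 500)
conn.commit()

deleted, chunks = purge_in_chunks(conn, cutoff=100)
remaining = conn.execute("SELECT COUNT(*) FROM messages").fetchone()[0]
print(deleted, chunks, remaining)  # 25000 3 500
```

Committing after each chunk keeps each transaction, and the locks it holds, short. `pause_s` is the optional sleep between DELETE statements mentioned above.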

Join Decomposition

Many high-performance applications use join decomposition. You can decompose a join by running multiple single-table queries instead of a multitable join, and then performing the join in the application. For example, instead of this single query:

```
mysql> SELECT * FROM tag
    -> JOIN tag_post ON tag_post.tag_id=tag.id
    -> JOIN post ON tag_post.post_id=post.id
    -> WHERE tag.tag='mysql';
```

You might run these queries:

```
mysql> SELECT * FROM tag WHERE tag='mysql';
mysql> SELECT * FROM tag_post WHERE tag_id=1234;
mysql> SELECT * FROM post WHERE post.id in (123,456,567,9098,8904);
```

Why on earth would you do this? It looks wasteful at first glance, because you’ve increased the number of queries without getting anything in return. However, such restructuring can actually give significant performance advantages:

• Caching can be more efficient. Many applications cache “objects” that map directly to tables. In this example, if the object with the tag mysql is already cached, the application can skip the first query. If you find posts with an ID of 123, 567, or 9098 in the cache, you can remove them from the IN() list. The query cache might also benefit from this strategy. If only one of the tables changes frequently, decomposing a join can reduce the number of cache invalidations.

• Executing the queries individually can sometimes reduce lock contention.

• Doing joins in the application makes it easier to scale the database by placing tables on different servers.

• The queries themselves can be more efficient. In this example, using an IN() list instead of a join lets MySQL sort row IDs and retrieve rows more optimally than might be possible with a join. We explain this in more detail later.

• You can reduce redundant row accesses. Doing a join in the application means you retrieve each row only once, whereas a join in the query is essentially a denormalization that might repeatedly access the same data. For the same reason, such restructuring might also reduce the total network traffic and memory usage.

• To some extent, you can view this technique as manually implementing a hash join instead of the nested loops algorithm MySQL uses to execute a join. A hash join might be more efficient. (We discuss MySQL’s join strategy later in this chapter.)

As a result, doing joins in the application can be more efficient when you cache and reuse a lot of data from earlier queries, you distribute data across multiple servers, you replace joins with IN() lists on large tables, or a join refers to the same table multiple times.
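A minimal sketch of the tag/post example, decomposed into three single-table queries and joined in Python. The schema and data are hypothetical, and sqlite3 stands in for MySQL. The `post_cache` dictionary plays two roles. It is the application's object cache, so cached posts drop out of the IN() list. It is also the hash table for the application-side join.

```python
import sqlite3

def make_db():
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE tag (id INTEGER PRIMARY KEY, tag TEXT);
        CREATE TABLE tag_post (tag_id INTEGER, post_id INTEGER);
        CREATE TABLE post (id INTEGER PRIMARY KEY, title TEXT);
        INSERT INTO tag VALUES (1234, 'mysql'), (99, 'postgres');
        INSERT INTO tag_post VALUES (1234, 123), (1234, 456), (99, 456), (99, 789);
        INSERT INTO post VALUES (123, 'Indexing'), (456, 'EXPLAIN'), (789, 'VACUUM');
    """)
    return conn

def posts_joined(conn, tag_name):
    """Single multitable join, executed by the database."""
    return conn.execute(
        "SELECT post.id, post.title FROM tag "
        "JOIN tag_post ON tag_post.tag_id = tag.id "
        "JOIN post ON tag_post.post_id = post.id "
        "WHERE tag.tag = ? ORDER BY post.id", (tag_name,)).fetchall()

def posts_decomposed(conn, tag_name, post_cache):
    """Three single-table queries, joined in the application."""
    tag_row = conn.execute("SELECT id FROM tag WHERE tag = ?", (tag_name,)).fetchone()
    if tag_row is None:
        return []
    post_ids = [pid for (pid,) in conn.execute(
        "SELECT post_id FROM tag_post WHERE tag_id = ?", (tag_row[0],))]
    missing = sorted(set(post_ids) - set(post_cache))  # cached posts are skipped
    if missing:
        placeholders = ", ".join("?" for _ in missing)
        for pid, title in conn.execute(
                f"SELECT id, title FROM post WHERE id IN ({placeholders})", missing):
            post_cache[pid] = title  # build side of the hash join
    # Probe side: one dictionary lookup per tag_post row.
    return sorted((pid, post_cache[pid]) for pid in post_ids)

conn = make_db()
cache = {}
joined = posts_joined(conn, "mysql")
first = posts_decomposed(conn, "mysql", cache)
second = posts_decomposed(conn, "postgres", cache)  # post 456 comes from the cache
print(first == joined)  # True
```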

Optimizing Specific Types of Queries

In this section, we give advice on how to optimize certain kinds of queries. We’ve covered most of these topics in detail elsewhere in the book, but we wanted to make a list of common optimization problems that you can refer to easily.

Most of the advice in this section is version-dependent, and it might not hold for future versions of MySQL. There’s no reason why the server won’t be able to do some or all of these optimizations itself someday.

Optimizing COUNT() Queries

The COUNT() aggregate function, and how to optimize queries that use it, is probably one of the top 10 most-misunderstood topics in MySQL. You can do a web search and find more misinformation on this topic than we care to think about.

Before we get into optimization, it’s important that you understand what COUNT() really does.

What COUNT() does

COUNT() is a special function that works in two very different ways: it counts values and rows. A value is a non-NULL expression (NULL is the absence of a value). If you specify a column name or other expression inside the parentheses, COUNT() counts how many times that expression has a value. This is confusing for many people, in part because values and NULL are confusing. If you need to learn how this works in SQL, we suggest a good book on SQL fundamentals. (The Internet is not necessarily a good source of accurate information on this topic.)

The other form of COUNT() simply counts the number of rows in the result. This is what MySQL does when it knows the expression inside the parentheses can never be NULL. The most obvious example is COUNT(*), which is a special form of COUNT() that does not expand the * wildcard into the full list of columns in the table, as you might expect; instead, it ignores columns altogether and counts rows.

One of the most common mistakes we see is specifying column names inside the parentheses when you want to count rows. When you want to know the number of rows in the result, you should always use COUNT(*). This communicates your intention clearly and avoids poor performance.
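The two forms can be compared side by side on a few rows that contain NULLs. Standard SQL defines these COUNT() semantics, so sqlite3 is used here as a convenient stand-in. The `items` table is hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE items (id INTEGER PRIMARY KEY, color TEXT)")
# Hypothetical data: five rows, two of which have no color (NULL).
conn.executemany("INSERT INTO items (color) VALUES (?)",
                 [("blue",), ("red",), (None,), ("blue",), (None,)])

row_count, id_values, color_values = conn.execute(
    # COUNT(*) counts rows; COUNT(col) counts non-NULL values of col.
    "SELECT COUNT(*), COUNT(id), COUNT(color) FROM items"
).fetchone()
print(row_count, id_values, color_values)  # 5 5 3
```

COUNT(id) matches COUNT(*) only because `id` can never be NULL. COUNT(color) skips the two NULL rows.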

Myths about MyISAM

A common misconception is that MyISAM is extremely fast for COUNT() queries. It is fast, but only for a very special case: COUNT(*) without a WHERE clause, which merely counts the number of rows in the entire table. MySQL can optimize this away because the storage engine always knows how many rows are in the table. If MySQL knows col can never be NULL, it can also optimize a COUNT(col) expression by converting it to COUNT(*) internally.

MyISAM does not have any magical speed optimizations for counting rows when the query has a WHERE clause, or for the more general case of counting values instead of rows. It might be faster than other storage engines for a given query, or it might not be. That depends on a lot of factors.

Simple optimizations

You can sometimes use MyISAM’s COUNT(*) optimization to your advantage when you want to count all but a very small number of rows that are well indexed. The following example uses the standard world database to show how you can efficiently find the number of cities whose ID is greater than 5. You might write this query as follows:

```
mysql> SELECT COUNT(*) FROM world.City WHERE ID > 5;
```

If you examine this query with SHOW STATUS, you’ll see that it scans 4,079 rows. If you negate the conditions and subtract the number of cities whose IDs are less than or equal to 5 from the total number of cities, you can reduce that to five rows:

```
mysql> SELECT (SELECT COUNT(*) FROM world.City) - COUNT(*)
    -> FROM world.City WHERE ID <= 5;
```

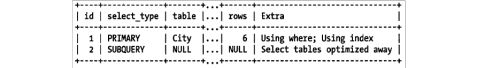

This version reads fewer rows because the subquery is turned into a constant during the query optimization phase, as you can see with EXPLAIN:
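The rewrite's arithmetic can be checked with sqlite3 on a hypothetical 100-row `City` table. SQLite has no MyISAM-style stored row count, so this shows only that the two queries agree, not the speedup. The equivalence relies on ID never being NULL. A row with a NULL ID would be included in the total, and therefore in the rewritten result, but excluded by `ID > 5`.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical stand-in for world.City with IDs 1..100. ID is an INTEGER
# PRIMARY KEY, so it is never NULL, which the rewrite below relies on.
conn.execute("CREATE TABLE City (ID INTEGER PRIMARY KEY, Name TEXT)")
conn.executemany("INSERT INTO City VALUES (?, ?)",
                 [(i, f"City {i}") for i in range(1, 101)])

direct = conn.execute("SELECT COUNT(*) FROM City WHERE ID > 5").fetchone()[0]
rewritten = conn.execute(
    "SELECT (SELECT COUNT(*) FROM City) - COUNT(*) FROM City WHERE ID <= 5"
).fetchone()[0]
print(direct, rewritten)  # 95 95
```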

A frequent question on mailing lists and IRC channels is how to retrieve counts for several different values in the same column with just one query, to reduce the number of queries required. For example, say you want to create a single query that counts how many items have each of several colors. You can’t use an OR (e.g., SELECT COUNT(color = 'blue' OR color = 'red') FROM items;), because that won’t separate the different counts for the different colors. And you can’t put the colors in the WHERE clause (e.g., SELECT COUNT(*) FROM items WHERE color = 'blue' AND color = 'red';), because the colors are mutually exclusive. Here is a query that solves this problem:

```
mysql> SELECT SUM(IF(color = 'blue', 1, 0)) AS blue, SUM(IF(color = 'red', 1, 0))
    -> AS red FROM items;
```

And here is another that’s equivalent, but instead of using SUM() uses COUNT() and ensures that the expressions won’t have values when the criteria are false:

```
mysql> SELECT COUNT(color = 'blue' OR NULL) AS blue, COUNT(color = 'red' OR NULL)
    -> AS red FROM items;
```
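Both forms can be tried with sqlite3 on a hypothetical `items` table. SQLite evaluates `color = 'blue'` to 1 or 0 and `0 OR NULL` to NULL, so the COUNT() form behaves as described. The SUM() form uses standard-SQL CASE in place of MySQL's IF(), so the sketch does not depend on the SQLite version.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE items (id INTEGER PRIMARY KEY, color TEXT)")
conn.executemany("INSERT INTO items (color) VALUES (?)",
                 [(c,) for c in ["blue", "red", "blue", "green", "red", "blue"]])

# SUM() form: CASE (standard SQL) stands in for MySQL's IF().
sum_form = conn.execute(
    "SELECT SUM(CASE WHEN color = 'blue' THEN 1 ELSE 0 END) AS blue, "
    "SUM(CASE WHEN color = 'red' THEN 1 ELSE 0 END) AS red FROM items"
).fetchone()

# COUNT() form: a false comparison OR NULL yields NULL, which COUNT() skips.
count_form = conn.execute(
    "SELECT COUNT(color = 'blue' OR NULL) AS blue, "
    "COUNT(color = 'red' OR NULL) AS red FROM items"
).fetchone()
print(sum_form, count_form)  # (3, 2) (3, 2)
```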

Using an approximation

Sometimes you don’t need an accurate count, so you can just use an approximation. The optimizer’s estimated row count in EXPLAIN often serves well for this. Just execute an EXPLAIN query instead of the real query.

At other times, an exact count is much less efficient than an approximation. One customer asked for help counting the number of active users on his website. The user count was cached and displayed for 30 minutes, after which it was regenerated and cached again. This was inaccurate by nature, so an approximation was acceptable. The query included several WHERE conditions to ensure that it didn’t count inactive users or the “default” user, which was a special user ID in the application. Removing these conditions changed the count only slightly, but made the query much more efficient. A further optimization was to eliminate an unnecessary DISTINCT to remove a filesort. The rewritten query was much faster and returned almost exactly the same results.
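The caching scheme in that example can be sketched as a small time-to-live wrapper around whatever count query the application runs. This is an illustrative Python sketch, not the customer's actual code: CachedCount and count_active_users are hypothetical names, and the clock is injectable so the 30-minute expiry can be exercised without waiting.

```python
import time
from typing import Callable, Optional


class CachedCount:
    """Serve a cached count; recompute it once it is ttl seconds old."""

    def __init__(self, compute: Callable[[], int], ttl: float = 30 * 60,
                 clock: Callable[[], float] = time.monotonic):
        self._compute = compute          # runs the (possibly approximate) COUNT query
        self._ttl = ttl
        self._clock = clock
        self._value: Optional[int] = None
        self._expires_at = 0.0

    def get(self) -> int:
        now = self._clock()
        if self._value is None or now >= self._expires_at:
            self._value = self._compute()
            self._expires_at = now + self._ttl
        return self._value


# Usage with a fake clock and a hypothetical count function.
fake_now = [0.0]
calls = []

def count_active_users() -> int:
    calls.append(fake_now[0])
    return 1000 + len(calls)             # pretend the user count grows over time

cache = CachedCount(count_active_users, clock=lambda: fake_now[0])
first = cache.get()                      # computed
fake_now[0] = 29 * 60
second = cache.get()                     # still cached
fake_now[0] = 30 * 60
third = cache.get()                      # expired, so recomputed
```

Because the displayed value can be up to 30 minutes stale anyway, a cheaper approximate query inside compute() costs little extra accuracy.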

More complex optimizations

In general, COUNT() queries are hard to optimize because they usually need to count a lot of rows (i.e., access a lot of data). Your only other option for optimizing within MySQL itself is to use a covering index. If that doesn’t help enough, you need to make changes to your application architecture. Consider summary tables (covered in Chapter 4), and possibly an external caching system such as memcached. You’ll probably find yourself faced with the familiar dilemma, “fast, accurate, and simple: pick any two.”
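As a rough illustration of a summary table, the sketch below keeps a one-row counter table in step with a base table, so reading the count touches one row instead of scanning. It uses Python's sqlite3 module as a stand-in for MySQL; the users and user_counts tables are hypothetical, active is assumed to hold only 0 or 1, and triggers are just one way to maintain such a table (periodically rebuilding it from the base table is another).

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE users (id INTEGER PRIMARY KEY, active INTEGER NOT NULL);

-- Hypothetical one-row summary table holding the precomputed count.
CREATE TABLE user_counts (active_users INTEGER NOT NULL);
INSERT INTO user_counts VALUES (0);

-- Triggers keep the summary in step with every change to the base table.
CREATE TRIGGER users_ins AFTER INSERT ON users BEGIN
  UPDATE user_counts SET active_users = active_users + NEW.active;
END;
CREATE TRIGGER users_del AFTER DELETE ON users BEGIN
  UPDATE user_counts SET active_users = active_users - OLD.active;
END;
CREATE TRIGGER users_upd AFTER UPDATE OF active ON users BEGIN
  UPDATE user_counts SET active_users = active_users - OLD.active + NEW.active;
END;
""")

conn.executemany("INSERT INTO users (active) VALUES (?)", [(1,), (0,), (1,), (1,)])
conn.execute("UPDATE users SET active = 0 WHERE id = 1")
conn.execute("DELETE FROM users WHERE id = 3")

summary = conn.execute("SELECT active_users FROM user_counts").fetchone()[0]
exact = conn.execute("SELECT COUNT(*) FROM users WHERE active = 1").fetchone()[0]
```

The trade-off is that every write to users now also updates the single counter row, which can become a contention point under heavy write load.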

Optimizing JOIN Queries

This topic is actually spread throughout most of the book, but we’ll mention a few highlights:

• Make sure there are indexes on the columns in the ON or USING clauses. Consider the join order when adding indexes. If you’re joining tables A and B on column c and the query optimizer decides to join the tables in the order B, A, you don’t need to index the column on table B. Unused indexes are extra overhead. In general, you need to add indexes only on the second table in the join order, unless they’re needed for some other reason.

• Try to ensure that any GROUP BY or ORDER BY expression refers only to columns from a single table, so MySQL can try to use an index for that operation.

• Be careful when upgrading MySQL, because the join syntax, operator precedence, and other behaviors have changed at various times. What used to be a normal join can sometimes become a cross product, a different kind of join that returns different results, or even invalid syntax.
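The reasoning behind indexing only the second table shows up in a simplified nested-loop join: the first table in the join order is scanned row by row, and only the second table is looked up by the join column. This Python sketch models an index as a dictionary from key to rows; build_index and nested_loop_join are hypothetical helpers, and MySQL's real executor is considerably more involved.

```python
def build_index(rows, key):
    """Map each key value to the rows that have it (a stand-in for a B-tree index)."""
    index = {}
    for row in rows:
        index.setdefault(row[key], []).append(row)
    return index


def nested_loop_join(outer_rows, inner_index, key):
    """Scan the first table; probe the second table's index once per outer row."""
    result = []
    for outer in outer_rows:                            # full scan: no index used
        for inner in inner_index.get(outer[key], []):   # indexed lookup
            result.append({**outer, **inner})
    return result


table_a = [{"c": 1, "a": "x"}, {"c": 2, "a": "y"}, {"c": 3, "a": "z"}]
table_b = [{"c": 1, "b": "p"}, {"c": 3, "b": "q"}, {"c": 3, "b": "r"}]

# Join order B, A: B is only scanned, so only A's column c needs an index.
rows = nested_loop_join(table_b, build_index(table_a, "c"), "c")
print([(r["b"], r["a"]) for r in rows])  # [('p', 'x'), ('q', 'z'), ('r', 'z')]
```

An index on table_b.c would never be consulted in this join order, which is the "extra overhead" the first bullet warns about.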

Optimizing Subqueries

The most important advice we can give on subqueries is that you should usually prefer a join where possible, at least in MySQL 5.5 and earlier. We covered this topic extensively earlier in this chapter. However, “prefer a join” is not future-proof advice, and if you’re using MySQL 5.6 or newer versions, or MariaDB, subqueries are a whole different matter.

Optimizing GROUP BY and DISTINCT

MySQL optimizes these two kinds of queries similarly in many cases, and in fact converts between them as needed internally during the optimization process. Both types of queries benefit from indexes, as usual, and that’s the single most important way to optimize them.

MySQL has two kinds of GROUP BY strategies when it can’t use an index: it can use a temporary table or a filesort to perform the grouping. Either one can be more efficient for any given query. You can force the optimizer to choose one method or the other with the SQL_BIG_RESULT and SQL_SMALL_RESULT optimizer hints, as discussed earlier in this chapter.
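The two strategies can be sketched in plain Python: one accumulates a running count per key, much like an in-memory temporary table, and the other sorts the rows first, like a filesort, then counts each run of equal keys. The function names are hypothetical. Roughly, SQL_SMALL_RESULT steers MySQL toward the temporary-table approach and SQL_BIG_RESULT toward sorting.

```python
from itertools import groupby
from operator import itemgetter


def group_with_temp_table(rows, key):
    """Hash-style grouping: one running count per key, like a temporary table."""
    counts = {}
    for row in rows:
        counts[row[key]] = counts.get(row[key], 0) + 1
    return counts


def group_with_sort(rows, key):
    """Sort-style grouping: sort on the key (like a filesort), then count each run."""
    ordered = sorted(rows, key=itemgetter(key))
    return {k: sum(1 for _ in run) for k, run in groupby(ordered, key=itemgetter(key))}


rows = [{"color": c} for c in ["red", "blue", "red", "green", "blue", "red"]]
hashed = group_with_temp_table(rows, "color")
sorted_groups = group_with_sort(rows, "color")
```

Both produce the same counts; the sort-based version also emits the groups in key order, while the hash-based one emits them in first-seen order.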

If you need to group a join by a value that comes from a lookup table, it’s usually more efficient to group by the lookup table’s identifier than by the value. For example, the following query isn’t as efficient as it could be:

mysql> SELECT actor.first_name, actor.last_name, COUNT(*)
    -> FROM sakila.film_actor
    ->    INNER JOIN sakila.actor USING(actor_id)
    -> GROUP BY actor.first_name, actor.last_name;

The query is more efficiently written as follows:

mysql> SELECT actor.first_name, actor.last_name, COUNT(*)
    -> FROM sakila.film_actor
    ->    INNER JOIN sakila.actor USING(actor_id)
    -> GROUP BY film_actor.actor_id;

Grouping by actor.actor_id could be even more efficient than grouping by film_actor.actor_id. Test on your own data to see which is faster.

This query takes advantage of the fact that the actor’s first and last names depend on the actor_id, so it will return the same results, but it’s not always the case that you can blithely select nongrouped columns and get the same result. You might even have the server’s SQL_MODE configured to disallow it. You can use MIN() or MAX() to work around this when you know the values within each group are identical because they depend on the grouped-by column, or if you don’t care which value you get:

mysql> SELECT MIN(actor.first_name), MAX(actor.last_name), ...;

Purists will argue that you’re grouping by the wrong thing, and they’re right. A spurious MIN() or MAX() is a sign that the query isn’t structured correctly. However, sometimes your only concern will be making MySQL execute the query as quickly as possible. The purists will be satisfied with the following way of writing the query:

mysql> SELECT actor.first_name, actor.last_name, c.cnt
    -> FROM sakila.actor
    ->    INNER JOIN (
    ->       SELECT actor_id, COUNT(*) AS cnt
    ->       FROM sakila.film_actor
    ->       GROUP BY actor_id
    ->    ) AS c USING(actor_id);

But the cost of creating and filling the temporary table required for the subquery may be high compared to the cost of fudging pure relational theory a little bit. Remember, the temporary table created by the subquery has no indexes.
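To check that these rewrites agree, here is a sketch using Python's sqlite3 module as a stand-in for MySQL, with hypothetical two-actor versions of sakila.actor and sakila.film_actor. It compares grouping by name, grouping by film_actor.actor_id with MIN()/MAX() on the name columns, and the derived-table version. The sketch joins with ON rather than USING for clarity; like MySQL without ONLY_FULL_GROUP_BY, SQLite accepts the ungrouped name columns in the first query.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE actor (actor_id INTEGER PRIMARY KEY, first_name TEXT, last_name TEXT);
CREATE TABLE film_actor (actor_id INTEGER, film_id INTEGER,
                         PRIMARY KEY (actor_id, film_id));
INSERT INTO actor VALUES (1, 'PENELOPE', 'GUINESS'), (2, 'NICK', 'WAHLBERG');
INSERT INTO film_actor VALUES (1, 10), (1, 11), (2, 10), (2, 12), (2, 13);
""")

join_clause = ("FROM film_actor INNER JOIN actor "
               "ON actor.actor_id = film_actor.actor_id")

# Group by the looked-up values (the less efficient form).
by_name = conn.execute(
    "SELECT actor.first_name, actor.last_name, COUNT(*) " + join_clause +
    " GROUP BY actor.first_name, actor.last_name ORDER BY 1, 2").fetchall()

# Group by the identifier; MIN()/MAX() turn the name columns into aggregates.
by_id = conn.execute(
    "SELECT MIN(actor.first_name), MAX(actor.last_name), COUNT(*) " + join_clause +
    " GROUP BY film_actor.actor_id ORDER BY 1, 2").fetchall()

# Aggregate in a derived table first, then join the names back in.
derived = conn.execute("""
    SELECT actor.first_name, actor.last_name, c.cnt
    FROM actor
      INNER JOIN (SELECT actor_id, COUNT(*) AS cnt
                  FROM film_actor GROUP BY actor_id) AS c
      ON c.actor_id = actor.actor_id
    ORDER BY 1, 2""").fetchall()
```

All three agree here only because no two actors share a name. If two actor_id values had the same first and last name, grouping by name would merge their rows, while the other two forms would keep them separate.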

It’s generally a bad idea to select nongrouped columns in a grouped query, because the results will be nondeterministic and could easily change if you change an index or the optimizer decides to use a different strategy. Most such queries we see are accidents (because the server doesn’t complain), or are the result of laziness rather than being designed that way for optimization purposes. It’s better to be explicit. In fact, we suggest that you set the server’s SQL_MODE configuration variable to include ONLY_FULL_GROUP_BY so it produces an error instead of letting you write a bad query.

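In MySQL 5.7 and earlier, the server automatically orders grouped queries by the columns in the GROUP BY clause, unless you specify an ORDER BY clause explicitly. If you don’t care about the order and you see this causing a filesort, you can use ORDER BY NULL to skip the automatic sort. You can also add an optional DESC or ASC keyword right after the GROUP BY clause to order the results in the desired direction by the clause’s columns. MySQL 8.0 no longer sorts GROUP BY results implicitly and, as of 8.0.13, no longer accepts ASC or DESC on GROUP BY columns, so there you need an explicit ORDER BY whenever order matters.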

Optimizing GROUP BY WITH ROLLUP

A variation on grouped queries is to ask MySQL to do superaggregation within the results. You can do this with a WITH ROLLUP clause, but it might not be as well optimized as you need. Check the execution method with EXPLAIN, paying attention to whether the grouping is done via filesort or temporary table; try removing the WITH ROLLUP and seeing if you get the same group method. You might be able to force the grouping method with the hints we mentioned earlier in this section.

Sometimes it’s more efficient to do superaggregation in your application, even if it means fetching many more rows from the server. You can also nest a subquery in the FROM clause or use a temporary table to hold intermediate results, and then query the temporary table with a UNION.
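A sketch of the temporary-table-plus-UNION approach, using Python's sqlite3 module as a stand-in for MySQL: a hypothetical sales table is grouped into a temporary table once, and a UNION ALL over that table adds per-region subtotals and a grand total, mimicking WITH ROLLUP output (NULL marks the rolled-up columns). One caution when porting this: MySQL documents that a single query cannot refer to the same TEMPORARY table more than once, so there you may need an ordinary table for the intermediate results.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE sales (region TEXT, product TEXT, amount INTEGER);
INSERT INTO sales VALUES
  ('east', 'apples', 10), ('east', 'pears', 5),
  ('west', 'apples', 7), ('west', 'apples', 3);

-- Intermediate results: one row per (region, product).
CREATE TEMP TABLE region_product AS
  SELECT region, product, SUM(amount) AS total
  FROM sales
  GROUP BY region, product;
""")

# Detail rows, per-region subtotals, and a grand total, combined with UNION ALL.
# NULL in region/product marks a rolled-up row, as WITH ROLLUP does.
rows = conn.execute("""
    SELECT * FROM (
        SELECT region, product, total FROM region_product
        UNION ALL
        SELECT region, NULL, SUM(total) FROM region_product GROUP BY region
        UNION ALL
        SELECT NULL, NULL, SUM(total) FROM region_product
    )
    ORDER BY region IS NULL, region, product IS NULL, product
""").fetchall()
```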

The best approach might be to move the WITH ROLLUP functionality into your application code.
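Moving the rollup into the application can be as simple as post-processing the grouped rows the server returns. This Python sketch assumes hypothetical (region, product, total) rows from a query grouped by region and product, and appends a subtotal row per region plus a grand total, using None where WITH ROLLUP would return NULL.

```python
from itertools import groupby
from operator import itemgetter


def rollup(rows):
    """Append per-region subtotals and a grand total to (region, product, total) rows."""
    out = []
    grand_total = 0
    ordered = sorted(rows, key=itemgetter(0, 1))
    for region, group in groupby(ordered, key=itemgetter(0)):
        group = list(group)
        subtotal = sum(total for _region, _product, total in group)
        out.extend(group)
        out.append((region, None, subtotal))   # like a WITH ROLLUP subtotal row
        grand_total += subtotal
    out.append((None, None, grand_total))      # like the final WITH ROLLUP row
    return out


# Hypothetical result of: SELECT region, product, SUM(amount) ... GROUP BY region, product
server_rows = [("west", "apples", 10), ("east", "apples", 10), ("east", "pears", 5)]
report = rollup(server_rows)
```

The server only does the plain GROUP BY, which it may optimize better, and the extra work in the application is a single pass over the already-grouped rows.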